Use an ArcGIS Pro notebook to mirror external data to feature services

One of the hidden gems in ArcGIS Pro is the ability to reach out to external geographic data sources using simple scripted workflows, which you can optionally automate.

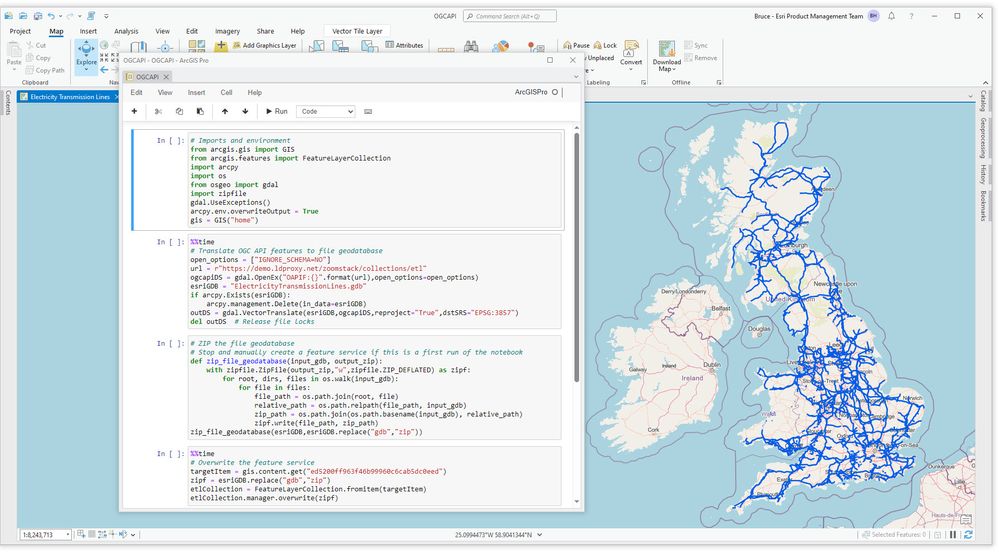

Here is today's subject matter, an OGC API Features layer mirrored to a hosted feature layer in ArcGIS Online, and the notebook that does the job:

The secret sauce in my cooking is GDAL - the Geospatial Data Abstraction Library. Esri ships GDAL in the standard conda environment in ArcGIS Pro. Here is the package description:

GDAL is a translator library for raster and vector geospatial data formats that is released under an X/MIT style Open Source license by the Open Source Geospatial Foundation. As a library, it presents a single raster abstract data model and vector abstract data model to the calling application for all supported formats.

While you can go deep with GDAL, the way I'm using it here is "copy-paste" ETL with a small T: I didn't do any feature-level transformation. All you have to know is where your source data is and what format it is, then plug both into the notebook. I count 84 vector formats in the supported vector drivers, so it isn't ArcGIS Data Interoperability, but it's a useful subset. Datasets can be local files or live at HTTP, FTP or even S3 URLs.
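As a rough illustration of the "files or URLs" point, GDAL reads remote files through virtual file system prefixes such as /vsicurl/ (HTTP, HTTPS, FTP) and /vsis3/ (Amazon S3). The helper below is a minimal sketch, not code from the notebook; the function name and the example locations are hypothetical.

```python
from urllib.parse import urlparse


def to_gdal_path(location: str) -> str:
    """Map a file path or URL to a path GDAL's virtual file systems understand.

    Hypothetical helper for illustration; it is not part of the blog's notebook.
    """
    parsed = urlparse(location)
    scheme = parsed.scheme.lower()
    if scheme in ("http", "https", "ftp"):
        # /vsicurl/ reads remote files over HTTP(S) or FTP
        return "/vsicurl/" + location
    if scheme == "s3":
        # /vsis3/ expects bucket/key without the s3:// scheme
        return "/vsis3/" + parsed.netloc + parsed.path
    # Anything else (including Windows drive letters) is treated as a local path
    return location
```

For example, `to_gdal_path("s3://my-bucket/data/roads.gpkg")` gives `/vsis3/my-bucket/data/roads.gpkg`, which can then be handed to GDAL like any local path. Service-style sources such as OGC API Features use a driver prefix instead of a virtual file system path.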

My example uses OGC API Features, but this isn't important: GDAL abstracts the concept of a dataset, so you can use the same code for any format.

However, on the topic of OGC API Features, the format supports the concept of a landing page, collections and items, and you can supply the URL of any of these endpoints, copied from wherever you navigate to them in a browser. I imported the electricity transmission lines collection from this landing page.
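To make the landing page, collections and items hierarchy concrete, here is a small sketch of how those endpoint URLs relate under the standard OGC API Features path layout. The base URL and collection ID in the usage note are placeholders, not the service used in this post, and the function name is hypothetical.

```python
def oapif_endpoints(landing_page: str, collection_id: str) -> dict:
    """Build the standard OGC API Features endpoint URLs for one collection.

    Hypothetical helper; landing_page and collection_id are supplied by the caller.
    """
    base = landing_page.rstrip("/")
    return {
        "landing_page": base,
        # Lists every collection the service offers
        "collections": f"{base}/collections",
        # Metadata for a single collection
        "collection": f"{base}/collections/{collection_id}",
        # The features themselves
        "items": f"{base}/collections/{collection_id}/items",
    }
```

Calling `oapif_endpoints("https://example.com/ogcapi/", "transmission-lines")["items"]` returns `https://example.com/ogcapi/collections/transmission-lines/items`. Any of these URLs can be the data path you give GDAL.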

I'll let you walk through the notebook (in the blog download), but in summary it converts external data to a file geodatabase, then overwrites a feature service with the data. To create the feature service in the first place, pause at the cell that creates a zip file, upload and publish it, then use the item ID to set your target. Do not create the feature service from map layers; the system requires a file-based layer definition.
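The notebook in the download is the reference, but the three steps summarized above can be sketched roughly as follows. This is an illustration under assumptions, not the notebook's actual code: the function names are hypothetical, the OpenFileGDB driver is assumed to support writing (GDAL 3.6 or later), and `gis` is assumed to be an already signed-in `arcgis.gis.GIS` object.

```python
import os
import shutil
import zipfile


def mirror_to_fgdb(source: str, gdb_path: str) -> None:
    """Copy every layer of a GDAL-readable source into a new file geodatabase."""
    from osgeo import gdal  # imported lazily; GDAL ships with ArcGIS Pro

    gdal.UseExceptions()
    if os.path.isdir(gdb_path):
        shutil.rmtree(gdb_path)  # start clean on every run
    # Assumption: this GDAL build can write with the OpenFileGDB driver
    ds = gdal.VectorTranslate(gdb_path, source, format="OpenFileGDB")
    ds = None  # dereference to flush and close the output


def zip_gdb(gdb_path: str, zip_path: str) -> str:
    """Zip a .gdb folder so the archive contains the folder itself at its root."""
    gdb_path = os.path.abspath(gdb_path)
    parent = os.path.dirname(gdb_path)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(gdb_path):
            for name in files:
                if name.endswith(".lock"):
                    continue  # skip geodatabase lock files
                full = os.path.join(root, name)
                zf.write(full, os.path.relpath(full, parent))
    return zip_path


def overwrite_service(gis, item_id: str, zip_path: str) -> None:
    """Overwrite a hosted feature service that was published from a zipped geodatabase."""
    from arcgis.features import FeatureLayerCollection  # ArcGIS API for Python

    item = gis.content.get(item_id)
    FeatureLayerCollection.fromitem(item).manager.overwrite(zip_path)
```

On the first run you would stop after `zip_gdb`, upload and publish the zip file by hand, and note the resulting item ID. Later runs call all three functions in order. Keeping the zip file name the same between runs is the safer choice, since overwrite generally expects it to match the originally published file.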

Run the notebook at any frequency that suits you, or turn it into a Python script tool and schedule it. Make sure you put the right GDAL format short name ahead of your data path.
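As a sketch of what putting the short name ahead of the data path looks like: for drivers that accept a prefix, the GDAL connection string is the driver short name, a colon, then the path or URL (for OGC API Features the short name is OAPIF). The helper names below are hypothetical and the URL in the usage note is a placeholder.

```python
def gdal_source(short_name: str, data_path: str) -> str:
    """Prefix a data path with a GDAL driver short name, e.g. OAPIF:https://..."""
    prefix = short_name + ":"
    if data_path.upper().startswith(prefix.upper()):
        return data_path  # already prefixed, leave unchanged
    return prefix + data_path


def list_layers(source: str) -> list:
    """Open any GDAL vector source and return its layer names."""
    from osgeo import gdal  # imported lazily; GDAL ships with ArcGIS Pro

    gdal.UseExceptions()
    ds = gdal.OpenEx(source, gdal.OF_VECTOR)
    return [ds.GetLayer(i).GetName() for i in range(ds.GetLayerCount())]
```

For instance, `gdal_source("OAPIF", "https://example.com/ogcapi/collections")` returns `OAPIF:https://example.com/ogcapi/collections`. Passing that string to `list_layers` is a quick way to confirm GDAL can see the source before you schedule anything. Many file formats need no prefix at all, because GDAL detects the driver from the file itself.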

There you have it, simple maintenance of a hosted feature service sourced from external data! It isn't real data virtualization, as the data is moved, but it is an easy way to make data available to all.
