Add a Feature and Update a Feature outputs: error with date fields

I've created "Add a Feature", "Update a Feature" and "Publish text to a TCP Socket" outputs, along with a GeoEvent Service that uses these outputs and produces a GeoEvent with a date field. When the GeoEvent Service produces events, the "Publish text to a TCP Socket" output shows the correct date in the date field, but the "Add a Feature" and "Update a Feature" outputs write a date equal to the correct date minus one hour into the date field of the corresponding feature class.

For example, "Publish text to a TCP Socket" output shows "01/19/2014 12:23:17 PM" and the "Add A Feature" and the "Update a Feature" outputs insert "01/19/2014 11:23:17".

What's happening?

Are you using the $ReceivedTime from the input for the dates streaming to your outputs? That date would be Universal Time. What time zone are you in? Maybe the TCP output is converting to your local time zone?

DG

No, I'm using the system time of my computer and the TCP output is showing the system time.

What you are observing is by design. The adapter used with the TCP/Text Output connector reports date/time values using your local system's locale. I'm in the Pacific Time Zone (California, USA) and using the GeoEvent Simulator's feature to 'Set value to Current Time' I can insert the local system date/time into events as they are sent into GeoEvent Processor and observe "local time" values displayed as text in either a CSV system file or as text via a TCP Socket.

- When I output the event data to a TCP socket using the 'Publish text to a TCP Socket' Output connector, and use the TCP Console application to observe a text representation of the data, I see my local system's date/time. The 'Text' adapter has converted the date/time object within the GeoEvent to a text representation.

- When I output the event data to an ArcGIS Feature Service, the GeoEvent's date/time object is handled by the JSON/FeatureService adapter and transport. The date/time representation in the feature service is a Long Integer recording the number of milliseconds since midnight 1/1/1970 UTC.

If you query the REST endpoint of your feature service to obtain this Long Integer value and use a conversion utility such as http://www.epochconverter.com/ you should find that the value obtained from the feature service can be converted to a GMT value as well as offset to your local time zone.
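The conversion described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not part of GeoEvent: the helper name `epoch_ms_to_datetime` is hypothetical, and the sample value is an assumed epoch value corresponding to the 01/19/2014 11:23:17 reading from the original question, taken here to be UTC with a local zone of UTC+1.

```python
from datetime import datetime, timedelta, timezone

# Reference point used by feature services: midnight 1/1/1970 UTC.
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def epoch_ms_to_datetime(epoch_ms, utc_offset_hours=0):
    """Convert epoch milliseconds to a datetime shown at the given UTC offset."""
    utc_value = EPOCH + timedelta(milliseconds=epoch_ms)
    return utc_value.astimezone(timezone(timedelta(hours=utc_offset_hours)))


# Assumed sample value, as it might be returned by a feature service query.
stored = 1390130597000

fmt = "%m/%d/%Y %H:%M:%S"
print(epoch_ms_to_datetime(stored).strftime(fmt))     # 01/19/2014 11:23:17 (UTC)
print(epoch_ms_to_datetime(stored, 1).strftime(fmt))  # 01/19/2014 12:23:17 (UTC+1)
```

The stored value never changes; only the offset applied at display time does, which is why two clients can show different times for the same feature.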

It's all about what client you are using to view a representation of the date/time, and how that client is converting the event data it receives in order to display it for you.

Hope this information helps -

RJ

RJ,

I have a "Receive text from TCP Socket" input, "Add a feature", "Update a Feature", "Publish text to a TCP Socket" and "Write to a .csv file" output.

The GeoEvent Definition is expecting an attribute type of "Date"

The TCP data feed is in UTC.

Sample: 2014-03-26 15:03:15.15

I set the "Expected Date Format:" in the "Receive text from a TCP Socket" input to yyyy-MM-dd HH:mm:ss.s

When processed through the GeoEvent Simulator (I am not setting the simulator to 'Set value to Current Time'), the TCP Console displays 03/26/2014 03:03:15 PM. The time field appears to be digested and reformatted.

The "Add a feature" and "Update a Feature" outputs store the data in SQL databases. The time field when viewed in ArcMap Attribute table is 3/26/2014 9:03:15 PM.

Yes, I am -6 time zone (MDT).

Any thoughts on how to avoid this apparent offset?

Lloyd

Desktop: Win7 x64

Servers: Windows Server R2 Enterprise x64

ArcGIS for Server 10.2.1

Inserted a Field Calculator to subtract 6 hours.

Expression: DATE_TIME-(6*1000*60*60)

Seems to be working. Hopefully the GeoEvent Processor development team will come up with something better.

Lloyd

Hello Lloyd -

Apologies for the delay in getting back to you. You have actually hit upon the best workaround for the issue you are observing.

Since you are simulating event data using the GeoEvent Simulator, you could modify the CSV simulation file to include time zone information with the date/time value it is sending to GeoEvent Processor. For example:

- 2014-03-31 13:00:45.00 UTC

- 2014-03-31 13:00:45.00 PDT

vs.

- 2014-03-31 13:00:45.00

The Expected Date Format mask you specify as part of your TCP/Text input's configuration would need to anticipate the time zone information by adding a 'z' to the end of the mask.

- yyyy-MM-dd HH:mm:ss.S z

vs.

- yyyy-MM-dd HH:mm:ss.S

That will allow you to specify the underlying date/time value's time zone ... but won't address the different representations of the value you are seeing in the TCP Console application vs. the attribute value in the feature service. Let me try to explain...

The Publish text to a TCP Socket output relies on an outbound connector which in turn relies on a Text adapter for a TCP transport. The outbound connector for the Write to a .csv file uses a Text adapter for a File transport. In both cases, the text adapter is adapting the GeoEvent's date/time value to represent it as comma separated text for display. The adapter is implemented to offset the date/time to display it in your local time zone.

The Add a feature and Update a Feature outputs both rely on an outbound connector which uses a JSON adapter for a FeatureService transport. This adapter uses a Long Integer representation of the date/time value as milliseconds since Midnight 1/1/1970. A received date/time value 2014-03-31 13:00:45.00 UTC will be placed into the feature service as 1396270845000 without offset - since the date/time value is already in UTC.

- If your simulated data specified a time zone of UTC, the values in your feature service will match the simulated date/time value, but the TCP Console will offset the simulated value to display a date/time relative to your current time zone.

- If your simulated data specified a time zone of PDT, the value displayed in the TCP Console will match the simulated date/time value, but the value written into the feature service would be offset to UTC to adhere to the milliseconds since Midnight 1/1/1970 standard used by feature services.
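The two cases above can be checked with a small Python sketch. It does not reproduce how the GeoEvent adapters are implemented; the `ZONE_OFFSETS` table and the `to_epoch_ms` helper are hypothetical names introduced for illustration, and the table covers only the two abbreviations used in this thread.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

# Hypothetical lookup table: abbreviation -> offset from UTC in hours.
ZONE_OFFSETS = {"UTC": 0, "PDT": -7}


def to_epoch_ms(text):
    """Parse 'yyyy-MM-dd HH:mm:ss.S z' style text into epoch milliseconds (UTC)."""
    stamp, zone = text.rsplit(" ", 1)
    naive = datetime.strptime(stamp, "%Y-%m-%d %H:%M:%S.%f")
    aware = naive.replace(tzinfo=timezone(timedelta(hours=ZONE_OFFSETS[zone])))
    return (aware - EPOCH) // timedelta(milliseconds=1)


print(to_epoch_ms("2014-03-31 13:00:45.00 UTC"))  # 1396270845000
print(to_epoch_ms("2014-03-31 13:00:45.00 PDT"))  # 1396296045000 (7 hours later in UTC)
```

The same wall-clock text yields two different stored values, 25,200,000 ms (7 hours) apart, depending on the time zone attached to it.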


When you design your GeoEvent Service, you will need to consider what client application will be used to retrieve and display the event data output from GeoEvent Processor. If the client will be displaying the UTC value from the feature service and is not going to offset the value to localtime, then you might want to consider using a Field Calculator to compute an explicit "LocalTime" value and configure the client to display the "LocalTime" rather than the date/time received with the event data.

- RJ

Hello RJ Sunderman,

The GEP Field Calculator seems to be a good workaround. However, in our case, the feature class concerned has two date fields: CREATEDDATE and MODIFIEDDATE

Can I use a single GEP Field Calculator processor to apply the offset to both existing date fields?

- If yes, what should the expression be? (Give me an example like this: CREATEDDATE + 10800000 , MODIFIEDDATE + 10800000 [NOTE: We are in Kuwait, which is GMT + 3] )

- If no, how should I proceed?

Thanks,

Richardson

Hello Richardson -

If you have two fields in your GeoEvent that you want to offset from UTC to Localtime, you will need to use two Field Calculator processors.

For example, consider the following input (generic JSON which can be sent to GeoEvent via HTTP / POST):

    [
      {
        "SensorID": "BZQT-5480-A",
        "SensorValue": 53.2,
        "ReportedDT": "2015-09-23 14:06:22.6 UTC",
        "CalibrationDate": "2015-07-01 00:00:00.0 UTC"
      }
    ]

I configured a 'Receive JSON on a REST Endpoint' input with an 'Expected Date Format' property value: yyyy-MM-dd HH:mm:ss.S z

The input is now configured to handle the data provider's specific string representation of a date/time, expecting the string value to include the time zone specification (in this case, UTC).

If I wanted a client to display date/times as localtime, and the client wasn't configured (or able) to offset the UTC values for me, I would want to create two additional event fields - one to hold the "Reported Date" in localtime and one to hold the "CalibrationDate" in localtime. I suggest this because deliberately falsifying the actual UTC date/time values by overwriting them with computed offsets is bad practice.

So, I copy the GeoEvent Definition used by the input to create a new event definition, and add the needed fields to the new event definition. Then I use a Field Mapper to map the received data into the new schema, leaving the two localtime fields unmapped. I now have a GeoEvent with two empty fields to which I can write calculated values (and I don't have to deal with the Field Calculator dynamically creating managed GeoEvent Definitions for me).

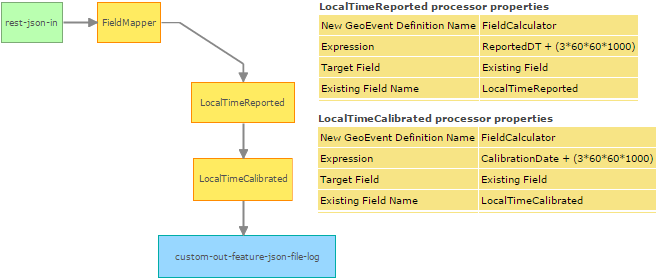

Here's my resulting GeoEvent Service, showing the configuration of each Field Calculator. In this case, you indicated that you wanted to shift the UTC values forward three (3) hours to Kuwait localtime, so I add a three hour equivalent number of milliseconds (3 hrs x 60 min/hr x 60 sec/min x 1000 ms/sec) to each original date/time value, instructing the Field Calculators to write their values into the prepared existing fields.

The output, in Esri Feature JSON format would look something like this:

    [
      {
        "attributes": {
          "SensorID": "BZQT-5480-A",
          "SensorValue": 53.2,
          "ReportedDT": 1443017182006,
          "CalibrationDate": 1435708800000,
          "LocalTimeReported": 1443027982006,
          "LocalTimeCalibrated": 1435719600000
        }
      }
    ]

Notice that we've preserved the "ReportedDT" and "CalibrationDate" values, reported by the sensor. Combining the Field Mapper with the Field Calculators we've effectively enhanced the sensor data to include reported and calibrated date/time values - offset to Kuwait / Riyadh localtime. You can use online utilities such as EpochConverter to convert the epoch millisecond values to a human readable date/time.
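As a cross-check of the numbers above, the offset arithmetic can be reproduced in Python. This is a sketch of the calculation only, not GeoEvent configuration: `shift_hours` and `add_local_time_fields` are hypothetical helper names, and the loop stands in for the two separate Field Calculator processors.

```python
MS_PER_HOUR = 60 * 60 * 1000  # 60 min/hr x 60 sec/min x 1000 ms/sec


def shift_hours(epoch_ms, hours):
    """Return the epoch millisecond value shifted by a whole number of hours."""
    return epoch_ms + hours * MS_PER_HOUR


def add_local_time_fields(attributes, field_map, hours):
    """Copy the attributes and add one shifted field per source date field."""
    enriched = dict(attributes)  # original UTC values are left untouched
    for source, target in field_map.items():
        enriched[target] = shift_hours(attributes[source], hours)
    return enriched


event = {
    "SensorID": "BZQT-5480-A",
    "SensorValue": 53.2,
    "ReportedDT": 1443017182006,
    "CalibrationDate": 1435708800000,
}
fields = {
    "ReportedDT": "LocalTimeReported",
    "CalibrationDate": "LocalTimeCalibrated",
}

result = add_local_time_fields(event, fields, 3)  # Kuwait is UTC+3
print(result["LocalTimeReported"])    # 1443027982006
print(result["LocalTimeCalibrated"])  # 1435719600000
```

Writing the shifted values into new fields, rather than overwriting the originals, keeps the true UTC values available to any client that does its own time zone conversion.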

Hope this information helps -

RJ

Hello RJ,

Thanks a lot. This worked for me. In my case, the Input date and time is in Local Time, so I am not using the Field Mapper. I just used 2 Field Calculators and I am getting the desired output which we needed.

Enjoy your day.

Regards,

Richardson