Large ArcGIS Server 'Site': stability issues

We have an existing ArcGIS Server (AGS) 10.0 solution that hosts close to 1,000 map services. We have been working on upgrading this environment to 10.2.1 for a few months now, and we are having a hard time getting it stable. These services see light use, and our program requirements call for an environment that can handle a large number of services with little use each. In AGS 10.0 we set all services to 'low' isolation with 8 threads/instance. We also had 90% of our services set to 0 min instances/node to save memory. Below is a summary of our approaches and where we are today. I'm posting this to the community for information, and I am really interested in feedback and/or recommendations to move this forward for our organization.

Background on deployment:

- Targeted ArcGIS Server 10.2.1

- Config-store/directories are hosted on a clustered file server (active/passive) and presented as a share: \\servername\share

- Web-tier authentication

- 1 web-adaptor with anonymous access

- 1 web-adaptor with authenticated access (Integrated Windows Authentication with Kerberos and/or NTLM providers)

- 1 web-adaptor 'internally' with authenticated access and administrative access enabled (used for publishing)

- User-store: Windows Domain

- Role-store: Built-In

We have a few ArcGIS Server deployments that look just like this, and they are all running fairly stably with decent performance.

Approach 1: Try to mirror (as closely as possible) our 10.0 deployment methodology 1:1

- Build 4 AGS 10.2.1 nodes (virtual machines).

- Build 4 individual clusters & add 1 machine to each cluster

- Deploy 25% of the services to each cluster. The AGS Nodes were initially spec'd with 4 CPU cores and 16GB of RAM.

- Each ArcSOC.exe seems to consume anywhere from 100-125MB of RAM (sometimes up to 150 or as low as 70).

- Publishing 10% of the services with 1 min instance (and the other 90% with 0 min instances) would leave around 25 ArcSOC.exe processes on each server when idle.

- The 16GB of RAM could host a total of 100-125 instances, leaving some room for services to start up instances when needed and scale slightly when in use.
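The per-node arithmetic in this plan is easy to re-run for other ArcSOC.exe footprints. A rough Python sketch, assuming services are split evenly across the 4 nodes, one ArcSOC.exe per idle instance at a flat size, and an optional `os_reserve_gb` (a hypothetical knob, not an ArcGIS setting) held back for Windows:

```python
def plan_node(total_services, nodes, pct_min_one, ram_gb, soc_mb, os_reserve_gb=0.0):
    """Rough per-node sizing for the Approach 1 layout (one machine per cluster).

    Assumptions: services are split evenly across nodes, each idle instance is
    one ArcSOC.exe of `soc_mb` MB, and `os_reserve_gb` (hypothetical, 0 by
    default) is held back for Windows and the ArcGIS Server framework.
    """
    services_per_node = total_services // nodes
    # Only services published with min instances = 1 keep an ArcSOC.exe alive when idle.
    idle_socs = round(services_per_node * pct_min_one)
    usable_mb = (ram_gb - os_reserve_gb) * 1024
    max_socs = int(usable_mb // soc_mb)
    return {
        "services_per_node": services_per_node,
        "idle_socs": idle_socs,
        "max_socs": max_socs,
        "headroom_socs": max_socs - idle_socs,
    }


# Sweep the observed per-process range on a 16GB node.
for soc_mb in (100, 125, 150):
    print(soc_mb, plan_node(1000, 4, 0.10, ram_gb=16, soc_mb=soc_mb))
```

Passing a non-zero `os_reserve_gb` lowers `max_socs` toward the more conservative 100-125 figure used in the plan.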

The first problem we ran into was publishing services with 0 min instances/node. Esri confirmed 2 'bugs':

#NIM100965 GLOCK files in arcgisserver\config-store\lock folder become frozen when stop/start a service from admin with 0 minimum instances and refreshing the wsdl site

#NIM100306 : In ArcGIS Server 10.2.1, service with 'Minimum Instances' parameter set to 0 gets published with errors on a non-Default cluster

So... that required us to publish all of our services with at least 1 min instance per node. At 1,000 services, that meant we needed 100-125GB of RAM just for the ArcSOC.exe processes, with no room for future growth....
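The same arithmetic for the "min 1 instance everywhere" case, as a sketch assuming a flat per-process footprint (decimal GB, matching the 100-125GB figure) and excluding Windows and framework overhead:

```python
def idle_soc_ram_gb(services, soc_mb, nodes=1, min_instances=1):
    """RAM (decimal GB) held by idle ArcSOC.exe processes alone.

    Assumes every service keeps `min_instances` ArcSOC.exe processes alive at a
    flat `soc_mb` each; Windows and ArcGIS Server framework overhead is excluded.
    Returns (site_total_gb, per_node_gb) for an even split across `nodes`.
    """
    total_gb = services * min_instances * soc_mb / 1000
    return total_gb, total_gb / nodes


low, _ = idle_soc_ram_gb(1000, 100)
high, per_node = idle_soc_ram_gb(1000, 125, nodes=4)
print(f"{low:.0f}-{high:.0f} GB total, about {per_node:.2f} GB per node at the high end")
# prints "100-125 GB total, about 31.25 GB per node at the high end"
```

Roughly 31 GB per node before Windows gets anything is consistent with the 32GB nodes below being described as tight.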

Approach 2: Double the RAM on the AGS Nodes

- We added an additional 16GB of RAM to each AGS node (they now have 32GB of RAM), which should host 200-250 ArcSOC.exe processes (tight for hosting all 1,000 services).

- We published about half of the services (around 500) and started seeing some major stability issues.

- During our publishing workflow... the clustered file server would crash.

- This file server hosts the config-store/directories for about 4 different *PRODUCTION* ArcGIS Server sites.

- It also hosts our Citrix users' workspaces and about 13TB of raster data.

- During a crash, it would fail over to the passive file server, and after about 5 minutes the secondary file server would crash as well.

- This is considered a major outage!

- On the last crash, part of the config-store was corrupted. When trying to log in to the 'admin' or 'manager' endpoints, we received an error about some sort of parsing issue (I cannot find the exact error message). We had disabled the primary site administrator account, so we went in to re-enable it, but the super.json file was EMPTY! We had our backup team restore the entire config-store from the previous day and copied that file over. I'm not sure what else was corrupted. After restoring that file, we were able to log in again with our AD accounts.

The file-server crashes were clearly caused by publishing large numbers of services to this new ArcGIS Server environment. We crashed our clustered file servers 3 separate times, all during this publishing workflow. We had no choice but to move the config-store/directories to an alternate location. We moved them to a small web server to see if we could reproduce the crashes there and continue moving forward. So far that server has not crashed.

During bootups of the AGS node hosting all the services, service startup time was consistently between 20 and 25 minutes. We found a startup timeout setting on each service that was set to 300 seconds (5 minutes) by default, and we raised it to 1800 seconds (30 minutes) to try to get these machines to start up properly. What was happening is that the ArcSOC.exe processes would keep accumulating until, at some point, they would all start disappearing.
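For ~1,000 services, changing that timeout by hand is tedious, so a scripted edit through the ArcGIS Server Administrator API is one option. The sketch below assumes the service JSON exposes the timeout as `maxStartupTime` (seconds) and that changes are posted to the service's `edit` operation; the admin URL, the `Hydro`/`Rivers` names, and the token are hypothetical placeholders, and the code only builds the request rather than sending it:

```python
import json
from urllib.parse import urlencode


def with_startup_timeout(service_json, seconds=1800):
    """Return a copy of a service definition with a longer startup timeout.

    Assumes the definition is the service JSON from the Admin API and that the
    timeout lives in its `maxStartupTime` property (seconds).
    """
    updated = dict(service_json)  # shallow copy; the caller's dict is not modified
    updated["maxStartupTime"] = seconds
    return updated


def build_edit_request(admin_url, folder, service_name, service_json, token):
    """Build (url, form-encoded body) for the service's Admin API 'edit' operation.

    `admin_url` and `token` are placeholders; sending the POST is left to the caller.
    """
    url = f"{admin_url}/services/{folder}/{service_name}.MapServer/edit"
    body = urlencode({"service": json.dumps(service_json), "f": "json", "token": token})
    return url, body.encode("utf-8")


# Hypothetical current definition, as it might come back from the Admin API.
current = {"serviceName": "Rivers", "type": "MapServer", "maxStartupTime": 300}
url, body = build_edit_request(
    "https://ags.example.com:6443/arcgis/admin", "Hydro", "Rivers",
    with_startup_timeout(current), token="<TOKEN>",
)
print(url)
```

In practice the current definition would be fetched first and edited, so the other properties are posted back unchanged.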

In the meantime, we also reviewed the ArcGIS 10.2.2 Issues Addressed List which indicated:

NIM099289 Performance degradation in ArcGIS Server when the location of the configuration store is set to a network shared location (UNC).

We asked our Esri contacts for more information regarding this bug fix and basically got this:

…our product lead did provide the following as to what updates we made to address the following areas of concern listed in NIM099289:

1. The Services Directory

2. Server Manager

3. Publishing/restarting services

4. Desktop

5. Diagnostics

ArcGIS Server was slow generating a list of services in multiple places in the software. Before this change, ArcGIS Server would read all services in a folder from disk every time a list of services was needed; this happened in the services directory, the manager, ArcCatalog, etc. This is normally not that bad, but if you have many, many services in a folder, a high number of requests, and a UNC/network path that is not the fastest, this can become very slow. Instead, we now remember the services in a folder and only update that in-memory list when they have changed.
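The fix Esri describes amounts to caching directory listings. A minimal sketch of that idea in Python, assuming (this is not Esri's implementation) that a change in the folder's modification time is the signal to re-read it; on a UNC share, timestamp granularity and client-side caching can make that signal unreliable, so treat it as illustrative only:

```python
import os
import tempfile


class FolderListingCache:
    """Remember directory listings; re-read a folder only when its mtime changes."""

    def __init__(self):
        self._cache = {}      # folder path -> (mtime_ns, sorted entries)
        self.disk_reads = 0   # how many times we actually listed the folder

    def list_services(self, folder):
        mtime = os.stat(folder).st_mtime_ns  # one cheap stat instead of a full listing
        cached = self._cache.get(folder)
        if cached is not None and cached[0] == mtime:
            return list(cached[1])
        self.disk_reads += 1
        entries = sorted(os.listdir(folder))
        self._cache[folder] = (mtime, entries)
        return list(entries)


# Demo with a throwaway folder; mtimes are set explicitly so the change is visible.
with tempfile.TemporaryDirectory() as folder:
    open(os.path.join(folder, "Rivers.MapServer.json"), "w").close()
    os.utime(folder, ns=(1_000_000_000, 1_000_000_000))
    cache = FolderListingCache()
    first = cache.list_services(folder)
    second = cache.list_services(folder)  # served from memory, no listing
    open(os.path.join(folder, "Roads.MapServer.json"), "w").close()
    os.utime(folder, ns=(2_000_000_000, 2_000_000_000))
    third = cache.list_services(folder)
    print(first, third, cache.disk_reads)
```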

Approach 3: Upgrade to 10.2.2 and add 3 more servers

- We added 3 more servers to the 'site' (all 4 CPU, 32GB RAM) and upgraded all of them to 10.2.2. We actually rebuilt all the machines from scratch again.

- We threw away our existing 'config-store' and directories since we knew at least 1 file was corrupt. We essentially started from square 1 again.

- All AGS nodes got a fresh install of 10.2.2 (we confirmed that refreshing folders from the REST page was much faster).

- Config-store still hosted on web-server

- We mapped our config-store to a DFS location so that we could move it around later

- Published all ~1,000 services successfully across 7 separate 'clusters'

- Changed all isolation back to 'high' for the time being.

This is the closest we have gotten. At least all services are published. Unfortunately, it is not very stable. We continually receive a lot of errors; here is a brief summary:

| Level | Message | Source | Code | Process | Thread |
|---|---|---|---|---|---|
| SEVERE | Instance of the service '<FOLDER>/<SERVICE>.MapServer' crashed. Please see if an error report was generated in 'C:\arcgisserver\logs\SERVERNAME.DOMAINNAME\errorreports'. To send an error report to Esri, compose an e-mail to ArcGISErrorReport@esri.com and attach the error report file. | Server | 8252 | 440 | 1 |
| SEVERE | The primary site administrator '<PSA NAME>' exceeded the maximum number of failed login attempts allowed by ArcGIS Server and has been locked out of the system. | Admin | 7123 | 3720 | 1 |
| SEVERE | ServiceCatalog failed to process request. AutomationException: 0xc00cee3a - | Server | 8259 | 3136 | 3373 |
| SEVERE | Error while processing catalog request. AutomationException: null | Server | 7802 | 3568 | 17 |
| SEVERE | Failed to return security configuration. Another administrative operation is currently accessing the store. Please try again later. | Admin | 6618 | 3812 | 56 |
| SEVERE | Failed to compute the privilege for the user 'f7h/12VDDd0QS2ZGGBFLFmTCK1pvuUP1ezvgfUMOPgY='. Another administrative operation is currently accessing the store. Please try again later. | Admin | 6617 | 3248 | 1 |
| SEVERE | Unable to instantiate class for xml schema type: CIMDEGeographicFeatureLayer | <FOLDER>/<SERVICE>.MapServer | 50000 | 49344 | 29764 |
| SEVERE | Invalid xml registry file: c:\program files\arcgis\server\bin\XmlSupport.dat | <FOLDER>/<SERVICE>.MapServer | 50001 | 49344 | 29764 |
| SEVERE | Unable to instantiate class for xml schema type: CIMGISProject | <FOLDER>/<SERVICE>.MapServer | 50000 | 49344 | 29764 |
| SEVERE | Invalid xml registry file: c:\program files\arcgis\server\bin\XmlSupport.dat | <FOLDER>/<SERVICE>.MapServer | 50001 | 49344 | 29764 |
| SEVERE | Unable to instantiate class for xml schema type: CIMDocumentInfo | <FOLDER>/<SERVICE>.MapServer | 50000 | 49344 | 29764 |
| SEVERE | Invalid xml registry file: c:\program files\arcgis\server\bin\XmlSupport.dat | <FOLDER>/<SERVICE>.MapServer | 50001 | 49344 | 29764 |
| SEVERE | Failed to initialize server object '<FOLDER>/<SERVICE>': 0x80043007: | Server | 8003 | 30832 | 17 |
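With this many services the log view gets noisy; tallying entries by source and code helps show which problems dominate, such as the config-store lock contention behind the "currently accessing the store" messages. A sketch assuming the entries have already been copied out as (level, message, source, code) tuples; how they are exported is left open, and the sample rows are abbreviated from the table above:

```python
from collections import Counter

# Substring shared by the config-store lock-contention messages in the table above.
LOCK_HINT = "Another administrative operation is currently accessing the store"


def summarize(entries):
    """Tally (source, code) pairs and count config-store lock-contention messages.

    `entries` is any iterable of (level, message, source, code) tuples.
    """
    rows = list(entries)  # materialize so generators survive two passes
    by_source_code = Counter((source, code) for _level, _msg, source, code in rows)
    lock_contention = sum(1 for _level, msg, _source, _code in rows if LOCK_HINT in msg)
    return by_source_code, lock_contention


sample = [
    ("SEVERE", "Failed to return security configuration. " + LOCK_HINT + ". Please try again later.", "Admin", 6618),
    ("SEVERE", "Failed to compute the privilege for the user '<USER>'. " + LOCK_HINT + ". Please try again later.", "Admin", 6617),
    ("SEVERE", "Invalid xml registry file: c:\\program files\\arcgis\\server\\bin\\XmlSupport.dat", "<FOLDER>/<SERVICE>.MapServer", 50001),
    ("SEVERE", "Invalid xml registry file: c:\\program files\\arcgis\\server\\bin\\XmlSupport.dat", "<FOLDER>/<SERVICE>.MapServer", 50001),
]
counts, locks = summarize(sample)
print(counts.most_common(1), locks)
```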

Other observations:

- Each AGS node makes 1 connection (session) to the file-server containing the config-store/directories

- During idle times, only 35-55 files are actually open from that session.

- During bootups (and bulk administrative operations), the number of open files consistently jumps to between 1,000 and 2,000 per session

- The 'System' process on the file server spikes, especially during bulk administrative operations.

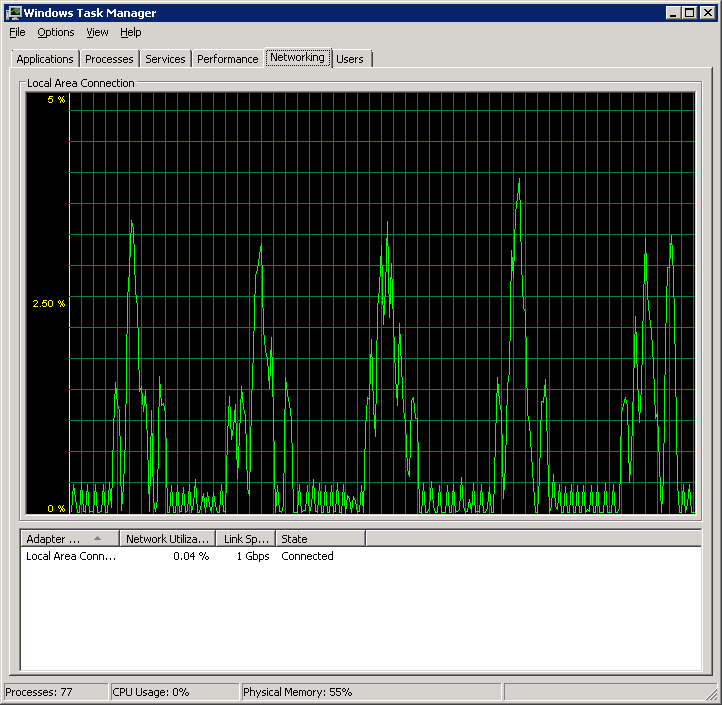

- The AGS nodes are consistently in communication with the file server (even when the site is idle). CPU/Memory and Network monitor on that looks like this:

- AGS nodes look similar. It seems there is a lot of 'chatter' when sitting idle.

- Requests to a service succeed 90% of the time but 10% of the time we receive HTTP 500 errors:

Error: Error exporting map

Code: 500
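To put a number on the 10% failure rate, one option is to replay the same export request and tally the outcomes. ArcGIS REST responses requested with `f=json` carry errors in a JSON body like the one above, so this sketch classifies the body and folds HTTP-level errors into the same shape. The URL and bbox are placeholders, and nothing is sent until `sample_export` is called:

```python
import json
import urllib.error
import urllib.request
from collections import Counter


def fetch_body(url, timeout=60.0):
    """GET a URL and return the body text; HTTP errors are folded into REST-style JSON."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.read().decode("utf-8", "replace")
    except urllib.error.HTTPError as err:
        return json.dumps({"error": {"code": err.code, "message": str(err.reason)}})


def classify(body):
    """Return 'ok', 'error <code>', or 'unparseable' for one f=json response body."""
    try:
        doc = json.loads(body)
    except ValueError:
        return "unparseable"
    if isinstance(doc, dict) and isinstance(doc.get("error"), dict):
        return f"error {doc['error'].get('code')}"
    return "ok"


def sample_export(url, attempts, fetch=fetch_body):
    """Repeat the same export request and tally the outcomes."""
    return Counter(classify(fetch(url)) for _ in range(attempts))


# Placeholder service and extent; substitute a real folder/service and bbox.
EXPORT_URL = ("http://www.example.com/arcgis/rest/services/<FOLDER>/<MapService>"
              "/MapServer/export?bbox=-120,30,-110,40&f=json")
```

Connection-level failures (`URLError`) are deliberately not caught, so an unreachable server stops the run instead of being counted as a map error.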

Options for the future

We have an existing site with the ArcGIS SOM instance name of 'arcgis'. These 1,000 services have been running in that 10.0 site for the past few years. Users interact with it using a URL like: http://www.example.com/arcgis/rest/services/<FOLDER>/<MapService>/MapServer

We are trying to host all these same services so that users accessing this URL will be unaffected. If we cannot, we will switch to 1 server in 1 cluster in 1 site (and instead have 7 sites). We will then republish all our content to the individual sites, which will have different URLs:

http://www.example.com/arcgis1/rest/services/<FOLDER>/<MapService>/MapServer

http://www.example.com/arcgis2/rest/services/<FOLDER>/<MapService>/MapServer

...

...

http://www.example.com/arcgisN/rest/services/<FOLDER>/<MapService>/MapServer

We would have an extensive amount of work either to communicate all the new URLs to our end users (and update all metadata, products, documentation, and content management systems to point to them), or to build URL redirect (or URL rewrite) rules for all the legacy services, or both. Neither option is ideal, but right now we seem to have exhausted all other options.

Hopefully this will help other users while they troubleshoot their ArcGIS Server deployments. Any ideas for improving our strategy are greatly appreciated. Thanks!
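If the redirect route is taken, the rewrite rules boil down to a folder-to-site lookup. A sketch in Python, assuming (hypothetically) that each legacy folder moves wholesale to one `arcgisN` site; the folder names below are made up, and the same mapping could be exported into whatever rewrite module the web tier uses. Query strings are left for the web server to carry over:

```python
import re
from typing import Dict, Optional

# Legacy 10.0 path: /arcgis/rest/services/<FOLDER>/<MapService>/MapServer[/...]
LEGACY_PATH = re.compile(r"^/arcgis/rest/services/(?P<folder>[^/]+)/(?P<rest>.+)$")


def rewrite_legacy_path(path: str, folder_to_site: Dict[str, str]) -> Optional[str]:
    """Map a legacy service path onto its new /arcgisN site, or None if unmapped."""
    match = LEGACY_PATH.match(path)
    if match is None:
        return None
    site = folder_to_site.get(match.group("folder"))
    if site is None:
        return None
    return f"/{site}/rest/services/{match.group('folder')}/{match.group('rest')}"


# Hypothetical folder names; the real table would come from the republishing plan.
SITES = {"Hydro": "arcgis1", "Transportation": "arcgis2"}
print(rewrite_legacy_path("/arcgis/rest/services/Hydro/Rivers/MapServer/export", SITES))
```

Returning `None` for unmapped paths lets the caller fall through to the legacy site instead of issuing a bad redirect.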

Thanks Pat-

You all are a bit more advanced than us for sure. Thanks for the node info; it's great for my future reference. Actually, we too had major issues with the AD roles; I didn't mean to imply otherwise. In fact, we found our AD policies too restrictive and reverted to full GIS-tier authentication, but at your scale that would be a challenge. Wish I could be of more help!

David

David and Patrick:

So you both are saying you found AD to be unstable and went back to pure ArcGIS roles?

Are either of you using Portal (the in house one, not AGOL)?

Portal is supposed to play nicely with AD and then push the security settings down to the secured servers.

This has been my plan for a new design and hopefully 10.3.1 is better with AD?

But if two large installations have jumped ship on using AD, it is something to consider!

Thanks

Paul, it's not that AD was unstable for us; it's that we in GIS don't have any control over our AD, so it was difficult to manage effectively. For us, GIS-tier authentication gives us more fine-grained control, since we have fairly restrictive AD policies, and it didn't make much sense to try to mix AD with the built-in roles. Also, our AD roles just weren't set up with Server in mind, but rather for internal read/write access to various shares, etc.

David

Thanks David.

That makes a lot of sense.

In our scenario, I might be able to leverage AD.

Absolutely, and we would like to leverage AD as well. It's definitely a priority for us moving forward, and I will certainly post back any info/results to this thread...

Patrick,

I have seen the resource exhaustion many times before. I ran into it when upgrading a site with 50+ map services on Server 10.1 (Windows 2008 R2, 26GB RAM, VMware, 2 servers in 1 cluster). Our systems analysts swore that we didn't need more than 1GB of virtual memory. ESRI support couldn't say how much virtual memory was needed, just "more". The best part was that ESRI support said to let Windows manage virtual memory; our systems analysts said no way in VMware, ha! I would publish some maps and then the services would crash. Add another gig of virtual memory and I could publish more services. Progress. We are now up to 5GB of virtual memory. The metric to watch is Commit on the Performance tab in Task Manager. Once this ratio maxes out, services will crash. For example, 29/44 is OK; 44/44 means a crash. We now monitor the Windows commit. Once this resource exhaustion error occurs, ArcGIS Server is in a bad state and needs to be restarted. When it happens, it seems to hit both servers in the cluster at the same time.

To the best of my understanding, Server runs Java virtual machines, and each JVM/map service requires virtual memory. Each map service that is published uses a little bit more. You can't afford to run out of virtual memory.
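A small monitor for the commit ratio described above. This sketch reads the system commit limit and current commit through the Win32 `GlobalMemoryStatusEx` call (its `ullTotalPageFile`/`ullAvailPageFile` fields, which approximate the Task Manager figures) and classifies the ratio; the 90% warning level is an arbitrary choice, not an Esri recommendation:

```python
import ctypes
import sys


class MEMORYSTATUSEX(ctypes.Structure):
    # Layout of the Win32 MEMORYSTATUSEX structure.
    _fields_ = [
        ("dwLength", ctypes.c_ulong),
        ("dwMemoryLoad", ctypes.c_ulong),
        ("ullTotalPhys", ctypes.c_ulonglong),
        ("ullAvailPhys", ctypes.c_ulonglong),
        ("ullTotalPageFile", ctypes.c_ulonglong),
        ("ullAvailPageFile", ctypes.c_ulonglong),
        ("ullTotalVirtual", ctypes.c_ulonglong),
        ("ullAvailVirtual", ctypes.c_ulonglong),
        ("ullAvailExtendedVirtual", ctypes.c_ulonglong),
    ]


def read_commit_gb():
    """Return (committed_gb, commit_limit_gb) on Windows via GlobalMemoryStatusEx."""
    if sys.platform != "win32":
        raise OSError("GlobalMemoryStatusEx is only available on Windows")
    stat = MEMORYSTATUSEX()
    stat.dwLength = ctypes.sizeof(MEMORYSTATUSEX)
    if not ctypes.windll.kernel32.GlobalMemoryStatusEx(ctypes.byref(stat)):
        raise ctypes.WinError()
    gb = 1024 ** 3
    limit = stat.ullTotalPageFile / gb
    committed = (stat.ullTotalPageFile - stat.ullAvailPageFile) / gb
    return committed, limit


def commit_status(committed_gb, limit_gb, warn_ratio=0.9):
    """Classify commit usage: 'ok', 'warning' (>= warn_ratio), or 'exhausted'."""
    ratio = committed_gb / limit_gb
    if ratio >= 1.0:
        return "exhausted"
    if ratio >= warn_ratio:
        return "warning"
    return "ok"


print(commit_status(29, 44), commit_status(44, 44))
if sys.platform == "win32":
    print(commit_status(*read_commit_gb()))
```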

Frank

Interesting stuff Frank.

One thing that gets confusing is talking about virtual memory.

Microsoft uses it to indicate Swap space, disk space acting as a place to hold RAM images (I know you know that, just putting it out there for clarification.)

So when you say you're up to 5GB of virtual memory, do you mean in your Windows Max Swap space size?

Or do you mean in your VMware allocation for the guest OS?

When we get into the virtual world where we're virtualizing servers, now virtual memory is something different.

It's now memory on the host being set aside for the virtual box's usage.

But the virtual box's guest OS, if Windows, is still doing its own virtual (swap) RAM....

And I think most Hypervisors have their own method of doing Swap space so that if available physical ram is over allocated, swapping starts happening. In my experience, this causes virtual boxes to start to crawl.

So that's sort of a third class of virtual ram.

As you said, resource exhaustion is the bane of ArcServer. (or really, almost any situation)

I think in this day and age of cheap ram we should be trying to never have to use Swap space.

This can be much easier said than done unless you're lucky enough to have pretty much unlimited resources.

I've noticed substantial improvements in my local VM boxes by requiring they not swap out.

They allocate and receive their chunk of RAM and run or they don't get fired off.

Now this does not address the issue of Windows Virtual memory (swap space)

Again, though, if you allocate enough RAM for the guest to begin with, then set the swap space to a low minimum, say 256MB, you can run and hope that the 256MB never grows. This means you're never going to swap (or if you do, it's in small chunks < 256MB), and if it does grow, it gives you a good starting point for adding more actual RAM (or actual virtual RAM for the guest 😉).

Besides watching the commit metric, one also wants to watch Physical Memory %.

When that number starts hitting >=80% (I think the magic number is 83%? ) Windows starts swapping big time.
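The two rules of thumb above (physical memory % and page-file growth past a small minimum) can be turned into a simple check. A sketch only: the 80% threshold is the uncertain rule of thumb from this post and 256MB is the example minimum, so both are parameters, and collecting the inputs is left to the caller:

```python
def memory_warnings(phys_used_pct, pagefile_size_mb, pagefile_min_mb=256, phys_warn_pct=80):
    """Flag high physical memory use and page-file growth beyond its configured minimum."""
    warnings = []
    if phys_used_pct >= phys_warn_pct:
        warnings.append(
            f"physical memory at {phys_used_pct}% (>= {phys_warn_pct}%): expect heavy paging")
    if pagefile_size_mb > pagefile_min_mb:
        warnings.append(
            f"page file grew to {pagefile_size_mb} MB (minimum {pagefile_min_mb} MB): "
            "a hint that the guest needs more RAM")
    return warnings


print(memory_warnings(64, 256))   # []
print(memory_warnings(85, 1024))  # two warnings
```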

There's quite a few articles out there on this.

Swapping is just bad for performance and all sorts of things. Especially on a server. Especially on a heavily loaded ArcServer with a lot of map services, the amount of disk thrashing that can go on can just paralyze a server.

I was just Googling the 80% number and came to realize that with the newer flavors of Windows after XP, things have gotten a LOT more complicated at the OS level. As the first article states, "Windows memory management is rocket science"

Now add virtualization onto this and ....

Here's an older article (2010), but it's worth the read for understanding virtual (swap) memory. The link inside it to the 2008 article by Russinovich (of Sysinternals fame) goes even deeper.

Windows 7 memory usage: What's the best way to measure? | ZDNet

They make some interesting comments about the Commit metric.

When I say virtual memory, I'm talking about virtual memory in Windows Server, configured through the Control Panel. I have no access to the VMware configuration. We had many discussions about why a Windows server should have little to no virtual memory. In theory, I agree; in practice, it doesn't work in my case with ArcGIS Server.

I was wrong about my current virtual memory allocation; it is actually 10GB. 5GB was barely enough, and it was only semi-stable. With more virtual memory we are more stable. I'm not saying it is perfect, but we have fewer crashes these days. Currently my servers are running at 64% physical memory and a commit of 29/44.

Does anyone know enough about Java to explain why ArcGIS Server uses OS virtual memory? Is it the Java virtual machine that requires the virtual memory? Can we configure Java not to use virtual memory?

I spoke with a few different ESRI tech guys and one of the topics was virtual memory usage.

They told me that they have sent questions to the development team asking for an explanation of services, processes, and virtual memory, without a reply. He said they are not sure, just that "it appears" Windows virtual memory is much more crucial in 10.1 and newer.

Pat:

Would you be able to offload some of these services to AGOL to reduce the number of hosted mapservices you need to upgrade yourself?

Also, do you really need that many mapservices? Would you have the ability to re-architect your mapservices by combining layers and still achieve the same functionality?