Large ArcGIS Server 'Site': stability issues

We have an existing ArcGIS Server (AGS) 10.0 solution that hosts close to 1,000 mapping services. We have been working on upgrading this environment to 10.2.1 for a few months now, and we are having a hard time getting a stable environment. These services see light use, and our program requirement is an environment that can handle a large number of services with little use. In the AGS 10.0 space we would set all services to 'low' isolation with 8 threads/instance. We also had 90% of our services set to 0 min instances/node to save on memory. Below is a summary of our approaches and where we are today. I'm posting this to the community for information, and I am really interested in feedback and/or recommendations to move this forward for our organization.

Background on deployment:

- Targeted ArcGIS Server 10.2.1

- Config-store/directories are hosted on a clustered file server (active/passive) and presented as a share: \\servername\share

- Web-tier authentication

- 1 web-adaptor with anonymous access

- 1 web-adaptor with authenticated access (Integrated Windows Authentication with Kerberos and/or NTLM providers)

- 1 web-adaptor 'internally' with authenticated access and administrative access enabled (use this for publishing)

- User-store: Windows Domain

- Role-store: Built-In

We have a few ArcGIS Server deployments that look just like this, and all are running fairly stably with decent performance.

Approach 1: Try to mirror (as close as possible) our 10.0 deployment methodology 1:1

- Build 4 AGS 10.2.1 nodes (virtual machines).

- Build 4 individual clusters & add 1 machine to each cluster

- Deploy 25% of the services to each cluster. The AGS Nodes were initially spec'd with 4 CPU cores and 16GB of RAM.

- Each ArcSOC.exe seems to consume anywhere from 100-125MB of RAM (sometimes up to 150 or as low as 70).

- Publishing 10% of the services with 1 min instance (and the other 90% with 0 min instances) would leave around 25 ArcSOC.exe processes on each server when idle.

- The 16GB of RAM could host 100-125 instances in total, leaving some room for services to start up instances when needed and scale slightly when in use.

The first problem we ran into was publishing services with 0 instances/node. Esri confirmed 2 'bugs':

#NIM100965 GLOCK files in arcgisserver\config-store\lock folder become frozen when stop/start a service from admin with 0 minimum instances and refreshing the wsdl site

#NIM100306 : In ArcGIS Server 10.2.1, service with 'Minimum Instances' parameter set to 0 gets published with errors on a non-Default cluster

So... that required us to publish all of our services with at least 1 min instance per node. At 1,000 services, that means we needed 100-125GB of RAM for all the running ArcSOC.exe processes, with no room for future growth.
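The arithmetic behind that figure can be sketched as follows. This is only a rough estimate, assuming the 100-125MB per ArcSOC.exe observed above, decimal gigabytes, and a hypothetical 25% of each node's RAM held back for the OS and bursts (the `reserve_fraction` value is an illustration, not a measured number).

```python
import math


def soc_memory_gb(services, min_instances, mb_low=100, mb_high=125):
    """Return a (low, high) idle RAM estimate in GB for always-on ArcSOC.exe processes."""
    processes = services * min_instances
    # Decimal GB (1 GB = 1000 MB) to match the round numbers used in the post.
    return processes * mb_low / 1000, processes * mb_high / 1000


def nodes_needed(total_gb, node_ram_gb, reserve_fraction=0.25):
    """Nodes required if each keeps reserve_fraction of its RAM free (assumed value)."""
    usable_gb = node_ram_gb * (1 - reserve_fraction)
    return math.ceil(total_gb / usable_gb)


low_gb, high_gb = soc_memory_gb(services=1000, min_instances=1)
print(low_gb, high_gb)              # idle baseline for 1,000 services at min 1
print(nodes_needed(high_gb, 32))    # 32GB nodes needed at the high estimate
```

Changing `min_instances` back to a fraction of services at 0 is what made the 10.0 design fit in far less memory.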

Approach 2: Double the RAM on the AGS Nodes

- We added an additional 16GB of RAM to each AGS node (they now have 32GB of RAM), which should let each node host 200-250 ArcSOC.exe processes (tight for hosting all 1,000 services).

- We published about half of the services (around 500) and started seeing some major stability issues.

- During our publishing workflow... the clustered file server would crash.

- This file server hosts the config-store/directories for about 4 different *PRODUCTION* arcgis server sites.

- It also hosts our citrix users work spaces and about 13TB of raster data.

- During a crash, it would fail-over to the passive file server and after about 5 minutes the secondary file server would crash.

- This is considered a major outage!

- On the last crash, some of the config-store was corrupted. While trying to log in to the 'admin' or 'manager' end-points, we received an error that indicated some sort of parsing issue. I cannot find the exact error message. We had disabled the primary site admin account, so we went in to re-enable it, but the super.json file was EMPTY! We had our backup team restore the entire config-store from the previous day and copied over that file. I'm not sure what else was corrupted. After restoring that file we were able to log in again with our AD accounts.

The file-server crash was clearly caused by publishing a large number of services to this new ArcGIS Server environment. We caused our clustered file servers to crash 3 separate times, all during this publishing workflow. We had no choice but to isolate this config-store/directories to an alternate location. We moved it to a small web-server to see if we could reproduce the crashes there and continue moving forward. So far that server has not crashed.

During bootups, with the AGS node hosting all the services, the service startup time was consistently between 20 and 25 minutes. We found a start-up timeout setting on each service that was set to 300 seconds (5 minutes) by default. We set that to 1800 seconds (30 minutes) to try to get these machines to start up properly. What had been happening is that the arcsoc.exe processes would build and build until, at some point, they would all start disappearing.
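Changing that timeout by hand on hundreds of services is tedious, so a scripted pass helps. The sketch below only prepares the updated service definitions; it does not contact a server. It assumes the service JSON exposes the timeout as `maxStartupTime` (my recollection of the Admin API property name, so verify it against your version), and the service names are made up for illustration.

```python
import copy

NEW_TIMEOUT_SECONDS = 1800  # the 30-minute value described above


def needs_update(service_def, seconds=NEW_TIMEOUT_SECONDS):
    """True when the definition does not already carry the desired timeout."""
    return service_def.get("maxStartupTime") != seconds


def with_startup_timeout(service_def, seconds=NEW_TIMEOUT_SECONDS):
    """Return a modified copy; the original definition is left untouched."""
    updated = copy.deepcopy(service_def)
    updated["maxStartupTime"] = seconds  # assumed property name
    return updated


# Hypothetical definitions, as they might look after being read from the site.
services = [
    {"serviceName": "Parcels", "type": "MapServer", "maxStartupTime": 300},
    {"serviceName": "Roads", "type": "MapServer", "maxStartupTime": 1800},
]

to_submit = [with_startup_timeout(s) for s in services if needs_update(s)]
for s in to_submit:
    print(s["serviceName"], s["maxStartupTime"])
```

Keeping the edit as a pure function makes it easy to review the full list of changes before anything is sent to the site.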

In the meantime, we also reviewed the ArcGIS 10.2.2 Issues Addressed List which indicated:

NIM099289 Performance degradation in ArcGIS Server when the location of the configuration store is set to a network shared location (UNC).

We asked our Esri contacts for more information regarding this bug fix and basically got this:

…our product lead did provide the following as to what updates we made to address the following areas of concern listed in NIM099289:

- 1. The Services Directory

- 2. Server Manager

- 3. Publishing/restarting services

- 4. Desktop

- 5. Diagnostics

ArcGIS Server was slow generating a list of services in multiple places in the software. Before this change, ArcGIS Server would read from disk all services in a folder every time a list of services was needed - this happened in the services directory, the manager, ArcCatalog, etc. This is normally not that bad, but if you have many, many services in a folder, a high number of requests, and a UNC/network that is not the fastest, then this can become very slow. Instead, we now remember the services in a folder and only update our memory when they have changed.
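The idea described in that reply (remember a folder's listing and re-read only when it changes) can be illustrated with a small cache. This is not Esri's implementation, just a sketch that assumes the folder's modification time is a good enough change signal; the class and attribute names are hypothetical.

```python
import os


class FolderListingCache:
    """Cache directory listings and re-read only when the folder's mtime changes."""

    def __init__(self):
        self._entries = {}   # folder path -> (mtime_ns, sorted names)
        self.disk_reads = 0  # counts how often the full listing was re-read

    def list_services(self, folder):
        # One cheap stat call replaces a full listing on every request.
        mtime_ns = os.stat(folder).st_mtime_ns
        cached = self._entries.get(folder)
        if cached is not None and cached[0] == mtime_ns:
            return cached[1]
        names = sorted(os.listdir(folder))
        self.disk_reads += 1
        self._entries[folder] = (mtime_ns, names)
        return names
```

Note that a directory's modification time typically changes when entries are added, removed, or renamed, not when an existing file's contents are edited, so a real implementation would need a stronger change signal than this sketch uses.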

Approach 3: Upgrade to 10.2.2 and add 3 more servers

- We added 3 more servers to the 'site' (all 4 CPU, 32GB RAM) and upgraded all to 10.2.2. We actually rebuilt all the machines from scratch again.

- We threw away our existing 'config-store' and directories since we knew at least 1 file was corrupt. We essentially started from square 1 again.

- All AGS nodes were installed with a fresh install of 10.2.2 (confirmed that refreshing folders from REST page were much faster).

- Config-store still hosted on web-server

- We mapped our config-store to a DFS location so that we could move it around later

- Published all 1,000-ish services successfully across 7 separate 'clusters'

- Changed all isolation back to 'high' for the time being.

This is the closest we have gotten. At least all services are published. Unfortunately it is not very stable. We continually receive a lot of errors, here is a brief summary:

| Level | Message | Source | Code | Process | Thread |
| --- | --- | --- | --- | --- | --- |
| SEVERE | Instance of the service '<FOLDER>/<SERVICE>.MapServer' crashed. Please see if an error report was generated in 'C:\arcgisserver\logs\SERVERNAME.DOMAINNAME\errorreports'. To send an error report to Esri, compose an e-mail to ArcGISErrorReport@esri.com and attach the error report file. | Server | 8252 | 440 | 1 |
| SEVERE | The primary site administrator '<PSA NAME>' exceeded the maximum number of failed login attempts allowed by ArcGIS Server and has been locked out of the system. | Admin | 7123 | 3720 | 1 |
| SEVERE | ServiceCatalog failed to process request. AutomationException: 0xc00cee3a - | Server | 8259 | 3136 | 3373 |
| SEVERE | Error while processing catalog request. AutomationException: null | Server | 7802 | 3568 | 17 |
| SEVERE | Failed to return security configuration. Another administrative operation is currently accessing the store. Please try again later. | Admin | 6618 | 3812 | 56 |
| SEVERE | Failed to compute the privilege for the user 'f7h/12VDDd0QS2ZGGBFLFmTCK1pvuUP1ezvgfUMOPgY='. Another administrative operation is currently accessing the store. Please try again later. | Admin | 6617 | 3248 | 1 |
| SEVERE | Unable to instantiate class for xml schema type: CIMDEGeographicFeatureLayer | <FOLDER>/<SERVICE>.MapServer | 50000 | 49344 | 29764 |
| SEVERE | Invalid xml registry file: c:\program files\arcgis\server\bin\XmlSupport.dat | <FOLDER>/<SERVICE>.MapServer | 50001 | 49344 | 29764 |
| SEVERE | Unable to instantiate class for xml schema type: CIMGISProject | <FOLDER>/<SERVICE>.MapServer | 50000 | 49344 | 29764 |
| SEVERE | Invalid xml registry file: c:\program files\arcgis\server\bin\XmlSupport.dat | <FOLDER>/<SERVICE>.MapServer | 50001 | 49344 | 29764 |
| SEVERE | Unable to instantiate class for xml schema type: CIMDocumentInfo | <FOLDER>/<SERVICE>.MapServer | 50000 | 49344 | 29764 |
| SEVERE | Invalid xml registry file: c:\program files\arcgis\server\bin\XmlSupport.dat | <FOLDER>/<SERVICE>.MapServer | 50001 | 49344 | 29764 |
| SEVERE | Failed to initialize server object '<FOLDER>/<SERVICE>': 0x80043007: | Server | 8003 | 30832 | 17 |
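With this many SEVERE entries, grouping them by code makes it easier to see which errors dominate. The sketch below tallies the (level, source, code) values transcribed from the summary above; on a real site the records would come from the server logs, and the function names here are only illustrative.

```python
from collections import Counter

SERVICE = "<FOLDER>/<SERVICE>.MapServer"

# (level, source, code) transcribed from the log summary above.
records = [
    ("SEVERE", "Server", 8252),
    ("SEVERE", "Admin", 7123),
    ("SEVERE", "Server", 8259),
    ("SEVERE", "Server", 7802),
    ("SEVERE", "Admin", 6618),
    ("SEVERE", "Admin", 6617),
    ("SEVERE", SERVICE, 50000),
    ("SEVERE", SERVICE, 50001),
    ("SEVERE", SERVICE, 50000),
    ("SEVERE", SERVICE, 50001),
    ("SEVERE", SERVICE, 50000),
    ("SEVERE", SERVICE, 50001),
    ("SEVERE", "Server", 8003),
]


def count_by_code(rows):
    """Count how many log records carry each error code."""
    return Counter(code for _level, _source, code in rows)


def count_by_source(rows):
    """Count how many log records each source produced."""
    return Counter(source for _level, source, _code in rows)


for code, n in count_by_code(records).most_common():
    print(code, n)
```

In this sample the XML schema errors (50000 and 50001) repeat for a single service process, while the store-locking errors (6617 and 6618) come from the Admin source, which hints at two separate problems.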

Other observations:

- Each AGS node makes 1 connection (session) to the file-server containing the config-store/directories

- During idle times, only 35-55 files are actually open from that session.

- During bootups (and bulk administrative operations), the number of open files jumps consistently to between 1,000 and 2,000 per session

- The 'system' process on the file server spikes especially during bulk administrative processes.

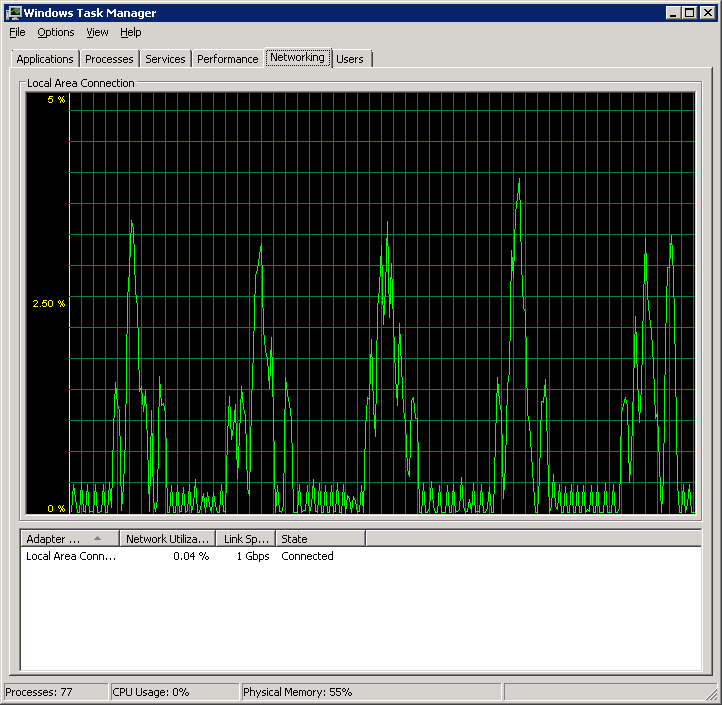

- The AGS nodes are consistently in communication with the file server (even when the site is idle). CPU/Memory and Network monitor on that looks like this:

- AGS nodes look similar. It seems there is a lot of 'chatter' when sitting idle.

- Requests to a service succeed 90% of the time but 10% of the time we receive HTTP 500 errors:

Error: Error exporting map

Code: 500

Options for the future

We have an existing site with the ArcGIS SOM instance name of 'arcgis'. These 1,000 services have been running in that 10.0 site for the past few years. Users have interacted with them using a URL like: http://www.example.com/arcgis/rest/services/<FOLDER>/<MapService>/MapServer

We are trying to host all these same services so that users accessing this URL will not be impacted. If we cannot, we will switch to 1 server in 1 cluster in 1 site (and have 7 sites instead). We would then re-publish all our content to individual sites, which will have different URLs:

http://www.example.com/arcgis1/rest/services/<FOLDER>/<MapService>/MapServer

http://www.example.com/arcgis2/rest/services/<FOLDER>/<MapService>/MapServer

...

...

http://www.example.com/arcgisN/rest/services/<FOLDER>/<MapService>/MapServer

We would have an extensive amount of work to either (or both) communicate all the new URLs to our end users (and update all metadata, products, documentation, and content management systems to point to the new URLs) and/or build URL redirect (or URL rewrite) rules for all the legacy services. Neither option is ideal, but right now we seem to have exhausted all other options.
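The redirect option boils down to a lookup from legacy URL to new site. Here is a minimal sketch of that mapping, assuming each service folder moves to exactly one site; the folder names and the `FOLDER_TO_SITE` table are hypothetical, and a production setup would express the same logic as web-server rewrite rules.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical assignment of service folders to the new per-site instance names.
FOLDER_TO_SITE = {"Planning": "arcgis1", "Utilities": "arcgis2"}


def redirect_target(legacy_url, folder_to_site=FOLDER_TO_SITE):
    """Map a legacy /arcgis/rest/services/<folder>/... URL to its new site, or None."""
    parts = urlsplit(legacy_url)
    segments = parts.path.split("/")
    # Expected shape: ['', 'arcgis', 'rest', 'services', folder, service, type, ...]
    if len(segments) < 5 or segments[1:4] != ["arcgis", "rest", "services"]:
        return None
    site = folder_to_site.get(segments[4])
    if site is None:
        return None
    segments[1] = site
    # Scheme, host, query string, and fragment are carried over unchanged.
    return urlunsplit(parts._replace(path="/".join(segments)))


print(redirect_target(
    "http://www.example.com/arcgis/rest/services/Planning/Zoning/MapServer?f=json"
))
```

Returning `None` for unknown folders leaves room to fall through to the old site while services are migrated in batches.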

Hopefully this will help other users while they troubleshoot their ArcGIS Server deployments. Any ideas on how to improve our strategy are greatly appreciated. Thanks!

Paul,

I am a much better GIS guy than IT guy, but it was VMware ESX... not sure of the version. From what I remember, there seemed to be a bigger VM overhead compared to HyperV (and naturally compared to the physical server alone).

The other issue I often found was that with HyperV you were not permitted to allocate more resources to the VMs than the physical host had to offer. I often would see IT guys take VMware, create a cluster with, say, 128GB RAM and 16 cores on the physical side, and then "over-allocate": basically creating a list of VMs that shared resources, so that if you totaled the RAM and CPU on the virtual servers, it would exceed or far exceed what was physically available. They would argue, and we would end up in that "we are IT so go away" environment. In the end, I have come to believe that HyperV might be better than VMware for GIS.

Many thanks Aaron.

That reduces my level of angst!

While over-allocating at the Hypervisor level is possible in ESXi:

virtualization - How is memory allocated in ESXi server? - Server Fault

I would stay as far away from that as I could, especially in an active environment.

However, maybe the OP's situation is a good example of where over-allocation makes sense?

1000 map services, most of which are rarely used but need to be available (if I recall, that's the situation.)

If you had to provide the actual RAM each map service requires (what, 80-250MB per service?), one could be looking at as much as 250 GB (or more, I suppose) plus CPUs... all to host services that are not hit very often.

In that case, over-allocation would make sense to me but you'd sure want to monitor usage.
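One simple number to monitor in that case is the over-commit ratio: total RAM promised to guests divided by what the host physically has. The sketch below uses the 128GB host mentioned earlier in the thread and a hypothetical set of seven 32GB guests; neither figure describes a real cluster from this discussion.

```python
def overcommit_ratio(vm_allocations_gb, host_ram_gb):
    """Total RAM allocated to guests divided by physical host RAM."""
    return sum(vm_allocations_gb) / host_ram_gb


# Hypothetical: seven 32GB guests on a single 128GB host.
vms = [32] * 7
ratio = overcommit_ratio(vms, host_ram_gb=128)
print(ratio)  # anything above 1.0 means the guests are promised more than exists
```

A ratio above 1.0 is only safe while the guests' actual combined usage stays under the physical total, which is why monitoring matters more than the ratio itself.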

One advantage of VMware ESXi that I believe HyperV does not provide is that you can, on the fly, add or reduce the RAM and cores allocated to a virtual box as needed. Maybe the latest HyperV has started offering this? I'm not a HyperV guy at all. (Makes me want to try running a virtual 2012R2 under VMware and then HyperV inside that 😉)

Sorry you had to experience that too common IT mode.. IT arrogance, like most arrogance, is based on insecurity and fear of ? Been seeing that for decades, and I'm often in IT... (more IS)

I think the conventional wisdom has been that VMware is what you need for large enterprise virtualizations. It is more robust, but has much higher costs (both $ and overhead) while HyperV has been better for smaller shops with lower needs and smaller budgets?

But apparently with win2012R2 srvr, HyperV has come closer to VMware in robustness and is cheaper. I'm reading articles now that are talking about combining the two. Especially when you need to save on licensing costs.

Personally, I love working in the virtual world from the standpoint of a developer and IT manager.

I think in 10 years, we will hardly recognize even our current virtual world.

Sorry to open an old post but I've been experiencing issues with service disconnects as well.

Are you saying ESRI has put a threshold of 200~ services for their server?

We are hosting 181 services split between two clusters, both 4CPU 16GB RAM.

Figured we were hosting too many services at roughly 90 each.

But if ESRI is suggesting 200 for each cluster, then maybe we should look elsewhere for the cause of our issues?

ESRI told me no more than 200 services in a site. For that many services my guess out of the gate is that you are lacking resources. We have a separate web server and separate DB server. We have 60 services running on one app server and sit at about 35GB of 64GB RAM usage in the middle of the afternoon. Our site has been stable now for like a month. Here are some tips.

Make sure services are set to min 1 and a max of the number of processor cores plus 1. At peak load, check RAM and CPU. If RAM is over about 70%, consider adding more; look at Committed memory under Task Manager > Performance > Memory and make sure the two numbers are not too close together. ESRI states that the new architecture seems to be more memory intensive. Make sure the machine's virtual memory is set to System Managed. Also, we have seen improvements when we increased (doubled) the Max Heap of SOC through Server Manager/admin. Lastly, we have also seen improvements in publishing performance by creating individual admin accounts under users/roles in ArcGIS Server Manager instead of sharing one master admin/publishing account among users.
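The two rules of thumb in that paragraph (max instances = cores + 1, and add RAM once usage passes roughly 70%) are easy to encode as a quick check. This is just a sketch of the advice as stated in this thread, not official Esri guidance, and the function names are made up.

```python
def suggested_pool(cores):
    """Pooling rule of thumb from this thread: min 1, max = cores + 1."""
    return {"min": 1, "max": cores + 1}


def ram_needs_attention(used_gb, total_gb, threshold=0.70):
    """True when RAM use at peak load exceeds the ~70% threshold mentioned above."""
    return used_gb / total_gb > threshold


print(suggested_pool(4))            # a 4-core machine
print(ram_needs_attention(35, 64))  # the 35GB-of-64GB example from this thread
```

On a 4-core machine this gives the min 1 / max 5 setting, and 35GB of 64GB sits comfortably under the threshold.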

Interesting. Thanks for the speedy response!!

We do have an admin account that is shared, but I am primarily the one publishing so I wonder if that would have any effect?

Also, we currently have pooling at min 1 instance and max 1 instance.

You suggest increasing the max instance to # of CPU + 1? So in our case set the max instance to 5 for each image service published?! Even though they are running at high isolation?

At this moment IT says our resources are ok, but I guess moving to 5 max instances would put a heavier load on CPU and RAM?

Sorry for all the questions. We are just trying anything at this point to fix this!

Also, it takes time to change settings across 180~ services, so we like to have notes on why we're doing a particular thing before dedicating the day to administering and monitoring.

Thanks again for the help!

Yes, ESRI recommends Min 1 and Max 5 given your setup. Then monitor it to see which services are being pegged at 5 in Server Manager (look at running and not in use). Also watch RAM, because it will creep up. What may be happening is that, if you are set to Min 1 and Max 1 and you have that many services, there is probably a huge request queue backing up. That will kill it. It's a delicate balance between CPU and RAM. The SOC processes are more RAM dependent, but if you do not have enough of them available to service requests, then your CPU and network will take a big hit. Also, requests sitting in a request queue will affect service stability and have a negative impact on the ability to publish or edit services. If you were paying me for help, I would say edit all services to 1 and 5 and have IT on standby to add more resources. Once that is done, and if everything I mentioned above is checked off the list and it's still messing up, call me... or ESRI. I would also ask why so many services. We did a study here where we took one map service with 8 layers and split it into 2 map services with 4 layers each, and we had zero performance gain. I would usually recommend having fewer services with more data if possible.

I completely agree on the number of services being limited. Unfortunately, these services make up the LiDAR and derivative products for the counties in our state. The client wants our users to be able to open the REST Services page and access a particular county with all 5 derivative products visible (slope, aspect, hillshade, DEM in meters and ft, and shaded relief).

I've created raster function templates for each county so theoretically you could have one service for each county and nest 5 functions on the service when publishing and the user could apply function on the fly. However, the raster function template is an ESRI proprietary tool and our WMS clients are unable to utilize said functions.

SO sadly we need 180 services rather than 36... (I've added the .rft files to the DEMs anyways for our ESRI clients that would like to take advantage of that feature)

So 1 min and 5 max.

Keep at High Isolation or try Low Isolation with multiple threads?

Thanks again for your help.

This may be the first lead we've had in months to getting back on track with these heavy services.

We're planning to move to ArcGIS Server 10.3.1 in the next few weeks, paired with two new servers that IT is setting up for us with Windows 2012 / quad CPU / 16GB RAM each. Hopefully this will help...

Ok. That makes sense. I don't envy you right now. lol. Yes, try that 1 and 5, and personally I would keep it set at high. What you may actually see, which I have seen before: we bumped the max up to 9 and had about half of my services running at 9 most of the day, so we added more CPU and RAM and upped it again to 13, which resulted in about 1/3 running maxed out. Honestly, it's very easy to underestimate demand/load. You may see the same on your end. Don't be surprised if you are going back to IT and asking for more CPU and more RAM... you may have to adjust it a few times to get the best performance.

Remember to make sure the virtual memory is set to system managed.

We figured resources were low regardless...

So would we be able to maintain 2 sites with 5 max instances? In number of processes, if we have 181 services x 5 max instances, that is 905 ArcSOC processes?

We'd need 5 servers at 16GB of RAM and 4CPU each?

Or could we get by with 2 servers at increased RAM and CPU?
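A rough worst-case estimate for that question can be sketched as below. It assumes every service reaches its max at the same time (unlikely in practice) and borrows the 100-125MB per ArcSOC.exe figure from the first post, which was measured on map services and may not hold for image services; no headroom for the OS is included.

```python
import math


def worst_case_processes(services, max_instances):
    """Upper bound on ArcSOC processes if every service hits its max at once."""
    return services * max_instances


def servers_for(processes, mb_per_process, server_ram_gb):
    """Servers needed to hold that many processes in RAM (decimal GB, no headroom)."""
    total_mb = processes * mb_per_process
    return math.ceil(total_mb / (server_ram_gb * 1000))


procs = worst_case_processes(services=181, max_instances=5)
print(procs)
print(servers_for(procs, 100, 16), servers_for(procs, 125, 16))
```

Since actual load is usually far below this bound, the number is better used as a ceiling for planning than as a purchase requirement.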

At this point it's a balance between resource costs and availability of products to WMS clients...

Adding 3 servers with high resources won't be cheap.

You've been a huge help.

Thanks again!

"Remember to make sure the virtual memory is set to system managed."

I spoke with IT and they said "Right now we hard code that at 24 GB and set a separate disk partition (E:\) for the swap."

How critical is it that virtual memory be set to system managed?