ArcGIS Hub/open data bug - duplicate records in export public catalog (CSV), records count off?


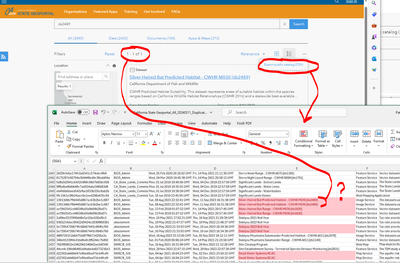

In the ArcGIS Hub/open data portals that our org shares data to, the "Export public catalog (CSV)" option produces a download with many duplicate records. It also appears that the record count of this CSV, duplicates included, is what the portal displays as its number of records.

The good news is that the duplicates seem to be present only in the CSV and don't appear to be discoverable by searching the portal (i.e., if there are two or more duplicate records in the CSV, a search in the portal returns only one). The bad news is that this looks like a serious bug: it isn't helpful to users who want an accurate inventory download, and the portals seem to be reporting inaccurate record counts that include the duplicates.

Please see the attached graphic showing an example from the California State Geoportal. I've also noticed the same behavior in our own Hub/open data portal (Hub Standard). My guess is that duplicates are being generated on the back end by item updates and aren't reflected in live searches, but the CSV download is generated from the back end?

We could open a case for this, but since it appears to be affecting many organizations, I thought I'd report it here. Thanks! (P.S. Years ago we ran into duplicate-record issues with Hub that were related to the automated way we were updating records, and we needed Esri's help to fix them. Hopefully this current issue isn't as complicated to fix.)
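To gauge how far off the exported count is, a minimal sketch like the one below could compare the total number of rows in the downloaded catalog CSV with the number of unique item IDs. It assumes the export has one column that uniquely identifies each item; the column name `id` and the file name `catalog.csv` are hypothetical placeholders, so check the headers in your own export and adjust them.

```python
import csv
import io
from collections import Counter


def find_duplicates(csv_text, key_column="id"):
    """Count rows per key in a catalog CSV.

    Assumption: `key_column` (hypothetical default "id") uniquely
    identifies an item, so any key seen more than once is a duplicate.
    Returns (total_rows, unique_keys, {key: count} for duplicated keys).
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    counts = Counter(row[key_column] for row in reader)
    total_rows = sum(counts.values())
    duplicates = {key: n for key, n in counts.items() if n > 1}
    return total_rows, len(counts), duplicates


def find_duplicates_in_file(path, key_column="id"):
    # Read the downloaded export (hypothetical path) and reuse the text-based check.
    with open(path, newline="", encoding="utf-8") as f:
        return find_duplicates(f.read(), key_column)


if __name__ == "__main__":
    # Tiny inline sample: item "abc" appears twice, "def" once.
    sample = "id,title\nabc,Roads\nabc,Roads\ndef,Parcels\n"
    total, unique, dupes = find_duplicates(sample)
    print(total, unique, dupes)  # 3 2 {'abc': 2}
```

Against a real download you would call something like `find_duplicates_in_file("catalog.csv")`; if the first number matches the record count shown on the site while the second is lower, that would be consistent with the count including duplicates.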

---

I saw this too and created a support ticket with Esri. Unfortunately, the duplicates fixed themselves and we couldn't figure out why. But it is unsettling that the public CSV doesn't represent the items in the content library.

I was also told to try a manual refresh of content to see if that makes the public catalog CSV reflect what's actually in the library. See this other post: https://community.esri.com/t5/arcgis-hub-blog/how-to-manually-refresh-your-site-s-content/ba-p/88511...

---

Thanks for the info. One of our partner agencies also opened a case about this, but I haven't heard whether there's been any resolution yet. I've seen these issues on at least three public Hub portals so far, and it's not just the duplicate records that are concerning: the portal's record count may also be inaccurate because of them. If you tried the manual refresh, did it work for you? If that is the fix, I'm not sure we have time to refresh a Hub site manually all the time, since our records are constantly being updated. Perhaps Esri could make that an automated back-end process as a future Hub bug fix?

---

I did try the manual refresh once, but since the .csv already reflected the items in the catalog, I couldn't tell whether the refresh would fix this issue.

I don't really understand the point of the manual refresh, and I agree that it should be an automatic function of Hub. When I asked during my support case, they said "they believe the catalog is gathered from a request to the hub site that references the API/indexer. The hope was that the manual refresh would fix this issue as it updates the indexer." But there isn't any documentation on it, and I also couldn't find a Python API to automate it.

---

Thanks for posting this. We discovered we are having the same issue! It appears there are 17 duplicate entries in our catalogue, ranging from Feature Services to Web Maps.

https://data.detroitmi.gov/search?collection=Dataset

I'm pretty sure we are going to have to submit a case for the one download issue we are facing. If so, I will bring this up too.