
arcpy.mapping replaceDataSource error message


Good day all,

I am trying to automate a process at work. The script will start by updating the data source for a layer file (.lyr) found at the top of the contents pane in a map document.

The original data source for the layer file is a shapefile, and I am trying to update it to a new shapefile in a workspace folder whose location I have assigned to the variable "path" (see screenshot below).

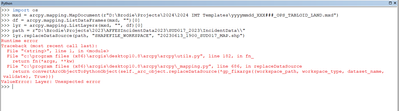

The following screenshot shows what I understand to be a simple way to write the code. I was using the Python window in ArcMap for troubleshooting:

As you can see I receive an error message.

I have tried writing this script several different ways, even omitting the map document and pointing straight to the layer file (arcpy.mapping.Layer([path])), but I continue to get this error message.

The map document is assigned to "mxd", the data frame to "df", and the layer to "lyr".

Reading through past posts about the .replaceDataSource function, I have seen that I am not the first to receive this error message.

I am just starting to write my own scripts, so I apologize if I have referred to anything incorrectly.

Has anyone figured out how to get past or correct this error?

Any help with this is greatly appreciated.

Here is the script as I have written it in an IDE. It's not complete, but it's useful for context:

```python
import arcpy, os

#Define variables
mxd = arcpy.mapping.MapDocument(r"D:\Brodie\Projects\2024\2024 IMT Templates\yyyymmdd_XXX###_OPS_TABLOID_LAND.mxd")
df = arcpy.mapping.ListDataFrames(mxd, "")[0]
lyr = arcpy.mapping.ListLayers(mxd, "", df)[0]
path = r"D:\Brodie\Projects\2023\AFFESIncidentData2023\SUD017_2023\IncidentData\\" #Path to new source data
nom = "20230613_1900_SUD017_MAP.shp" #Name of new source data

#Update data source for fire perimeter .lyr
lyr.replaceDataSource(path, "SHAPEFILE_WORKSPACE", nom)
lyr.name = "20230613_1900_SUD017_MAP" #Name of updated layer as it appears in the contents pane

#Save mxd under new name
mxd.saveACopy(r"D:\Brodie\Projects\2023\AFFESIncidentData2023\SUD017_2023\Projects\20230613_1900_SUD017_OPS_TABLOID_LAND.mxd")

print "Script complete"
```
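A minimal pre-flight check may help narrow the error down before `lyr.replaceDataSource()` is called. The helper below is hypothetical (`check_shapefile` is not an arcpy function): it uses only the standard library to confirm that the workspace folder exists and that the shapefile's core parts (.shp, .shx, .dbf) are present, and it returns the dataset name without the .shp extension. Passing the name without the extension is an assumption worth testing, not a confirmed fix for this error.

```python
import os

def check_shapefile(folder, filename):
    """Hypothetical helper: validate a shapefile before replaceDataSource().

    Returns (workspace_folder, dataset_name_without_extension), or raises
    an error that says what is missing.
    """
    folder = os.path.normpath(folder)  # also drops trailing path separators
    if not os.path.isdir(folder):
        raise IOError("Workspace folder not found: " + folder)
    base, ext = os.path.splitext(filename)
    if ext.lower() not in ("", ".shp"):
        raise ValueError("Expected a .shp file name, got: " + filename)
    # A shapefile needs at least its .shp, .shx and .dbf parts to be readable
    missing = [base + e for e in (".shp", ".shx", ".dbf")
               if not os.path.isfile(os.path.join(folder, base + e))]
    if missing:
        raise IOError("Missing in %s: %s" % (folder, ", ".join(missing)))
    return folder, base

# In ArcMap (Python 2.7), with path, nom and lyr from the script above:
# workspace, dataset = check_shapefile(path, nom)
# lyr.replaceDataSource(workspace, "SHAPEFILE_WORKSPACE", dataset)
```

If the check raises, the error message points at the folder or file that replaceDataSource could not find; if it passes, the problem is more likely in the layer or the call itself.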

---

I haven't done this in ArcMap, but your path doesn't look right. Try removing the two trailing backslashes.
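For reference, a Python raw string cannot end in a single backslash, so `r"...IncidentData\\"` actually ends with two backslash characters, not one. The plain-Python sketch below (no arcpy needed) uses the standard `ntpath` module so Windows path rules apply on any machine. It only checks the string itself; it does not show that the trailing backslashes are what causes the replaceDataSource error.

```python
import ntpath  # Windows path rules, importable on any OS

# A raw string keeps BOTH trailing backslashes in the value
path = r"D:\Brodie\Projects\2023\AFFESIncidentData2023\SUD017_2023\IncidentData\\"
clean = r"D:\Brodie\Projects\2023\AFFESIncidentData2023\SUD017_2023\IncidentData"

print(len(path) - len(clean))  # 2: the two extra backslash characters
# normpath collapses the trailing separators, so both spellings name the same folder
print(ntpath.normpath(path) == clean)  # True
```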

---

Good afternoon,

Thank you for your response. I tried removing the two backslashes but am still receiving the same error.

I added the script as it is written in an IDE to the original post.

---

Maybe use None for the wildcard parameter instead of an empty string.

```python
df = arcpy.mapping.ListDataFrames(mxd, None)[0]
lyr = arcpy.mapping.ListLayers(mxd, None, df)[0]
```
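Related to the `[0]` at the end of `ListLayers`: it takes whichever layer the list returns first, which might not be the one intended (for example, a group layer, which has no data source to replace). A hypothetical alternative is to pick the layer by name and confirm it supports `DATASOURCE` before calling `replaceDataSource`. `pick_layer` and the layer name "Fire Perimeter" below are illustrative assumptions, not part of arcpy; `.name` and `.supports("DATASOURCE")` are members of the arcpy.mapping Layer class.

```python
def pick_layer(layers, name):
    """Hypothetical helper: first layer called `name` that has a data source.

    `layers` can be the list from arcpy.mapping.ListLayers(mxd, "", df);
    anything with a .name attribute and a .supports() method works.
    """
    for lyr in layers:
        # Group layers, for example, do not support DATASOURCE
        if lyr.name == name and lyr.supports("DATASOURCE"):
            return lyr
    raise ValueError("No layer named %r with a data source" % name)

# In ArcMap (Python 2.7), "Fire Perimeter" being a hypothetical layer name:
# lyr = pick_layer(arcpy.mapping.ListLayers(mxd, "", df), "Fire Perimeter")
# print lyr.dataSource  # confirm the current source before replacing it
```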

---

Good afternoon,

Again, I appreciate your insights and thoughts on a solution.

Unfortunately, I tried using None for the wildcard parameter and received the same error message.

I would do this in Pro, but the organisation I work for still uses ArcMap for certain processes, so I am trying to get this working in ArcMap with Python 2.7.

Thanks again

---

I've used variations of this script:

Updating and fixing data sources with arcpy.mapping

```python
import arcpy
mxd = arcpy.mapping.MapDocument(r"C:\Project\Project.mxd")
for lyr in arcpy.mapping.ListLayers(mxd):
    if lyr.supports("DATASOURCE"):
        if lyr.dataSource == r"C:\Project\Data\Parcels.gdb\MapIndex":
            lyr.findAndReplaceWorkspacePath(r"Data", r"Data2")
mxd.saveACopy(r"C:\Project\Project2.mxd")
del mxd
```
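Because the new shapefile has a different name, a variation of this loop could call `replaceDataSource` (which takes a dataset name) instead of `findAndReplaceWorkspacePath` (which keeps the dataset name). This is a sketch that has not been run against ArcMap: `replace_matching_sources` is a hypothetical helper, and `old_source` has to match `lyr.dataSource` exactly as ArcMap reports it, so printing `lyr.dataSource` first is the safest way to get that string.

```python
def replace_matching_sources(layers, old_source, new_folder, new_name):
    """Hypothetical variation of the loop above for a renamed shapefile.

    `layers` is what arcpy.mapping.ListLayers(mxd) returns in ArcMap; any
    objects with .supports(), .dataSource and .replaceDataSource() work.
    Returns the number of layers that were repointed.
    """
    count = 0
    for lyr in layers:
        # Check supports() first: layers such as group layers have no data source
        if lyr.supports("DATASOURCE") and lyr.dataSource == old_source:
            # Changes the workspace AND the dataset name in one call
            lyr.replaceDataSource(new_folder, "SHAPEFILE_WORKSPACE", new_name)
            count += 1
    return count
```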

---

Good morning Tom,

Thank you for your reply. I do not know if this will work, as the new data does not have the same name as the previous data; from my understanding, findAndReplaceWorkspacePath looks for data of the same name in a new path.

Also, in line 6, what do the two "Data" arguments refer to?

Thanks again
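On the line 6 question: as I understand the Esri help, the two arguments are the part of the old workspace path to find (`r"Data"`) and the text to put in its place (`r"Data2"`), so `C:\Project\Data\Parcels.gdb` becomes `C:\Project\Data2\Parcels.gdb` while the dataset name (`MapIndex`) stays the same. The plain-Python analogy below only illustrates that idea with string operations; it is not how arcpy implements the method.

```python
import ntpath  # Windows path rules on any OS

# Analogy only: swap part of the workspace path, keep the dataset name
data_source = r"C:\Project\Data\Parcels.gdb\MapIndex"
workspace, dataset = ntpath.split(data_source)  # ("C:\Project\Data\Parcels.gdb", "MapIndex")
new_workspace = workspace.replace("Data", "Data2")
print(ntpath.join(new_workspace, dataset))
# prints C:\Project\Data2\Parcels.gdb\MapIndex
```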